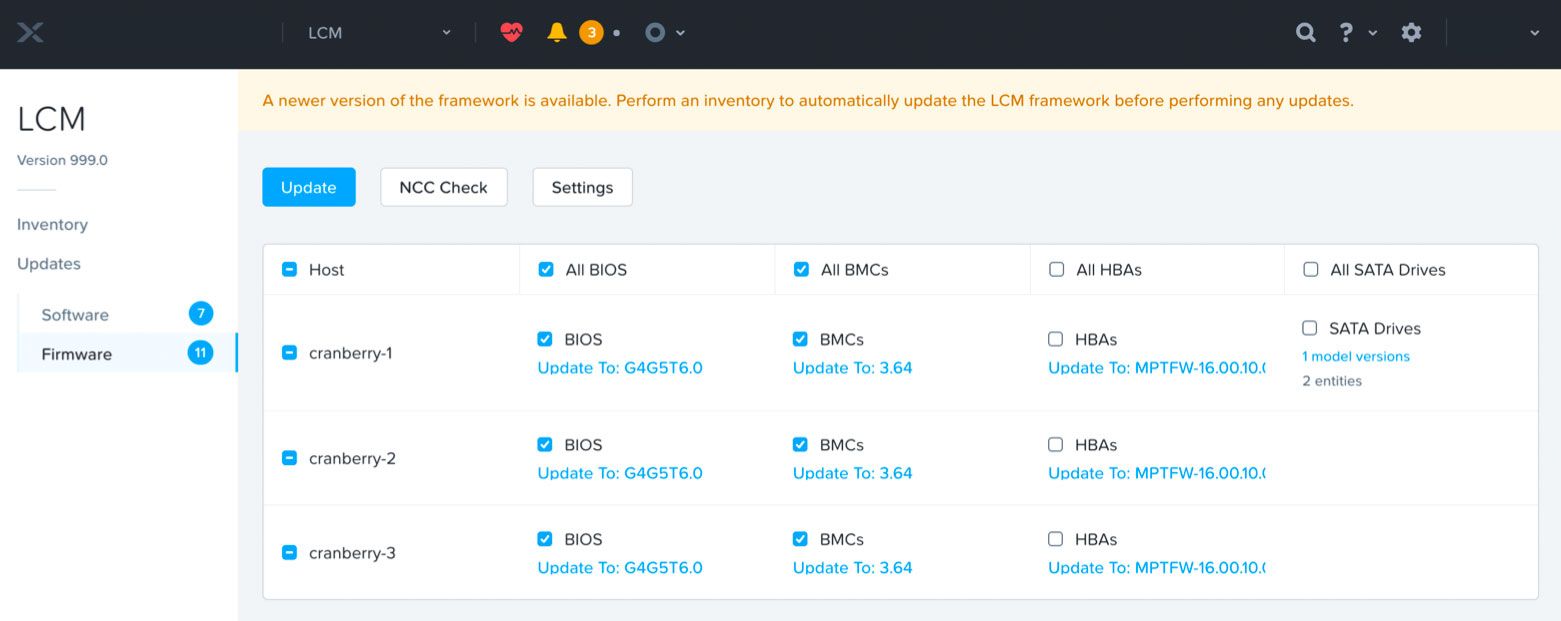

From time to time, the unexpected can happen. Several weeks ago, I was working with the Life Cycle Manager to perform firmware updates. While LCM was in progress, it attempted to update the firmware on a disk, and simultaneously the disk failed. Thankfully, LCM detected the failure and stopped the rolling firmware upgrade. Unfortunately, that left the cluster without resiliency. From this point, there are several steps you must take to get the cluster back to a healthy state.

- The first thing I noticed was the AHV node was stuck in Phoenix. To exit, I ran the following command:

python /phoenix/reboot_to_host.py2. After the host rebooted, I took a look at the CVM status. I determined the the CVM was in maintenance mode. To check the status of the cvm's, use the ncli host list command via ssh to the cluster IP. To exit maintenance mode, use the following command. A detailed post can be found here.

nutanix@zzz:~$ ncli host edit id=<host id> enable-maintenance-mode=false3. Now that the cluster status is looking better, we need to take a look at the AHV node. A review of the acli host.list command showed the AHV node was still in maintenance mode. To exit the host, from any cvm, I used the following command. A detailed post can be found here.

nutanix@zzz:~$ acli host.exit_maintenance_mode <hypervisor_ipaddress>4. At this point, Nutanix kicked off a disk removal operation to ensure the replication factor was met by copying data to other disks in the cluster. I waited for this operation to complete. I monitored the task progress from the prism element interface.

5. Once the removal was completed, I ran the a complete NCC health check to see what else was remaining and if the cluster was in a good state.

nutanix@zzz:~$ ncc health_checks run_all6. After validating the cluster was healthy, and resiliency was OK, I attempted to perform the rest of the updates. During the pre-checks, I received an error that foundation service was running in the cluster.

test_foundation_service_status_in_cluster

to resolve this error, we must first determine what node is still running the service.

nutanix@zzz:~$ allssh "genesis status | grep foundation"

================== 10.27.2.31 =================

foundation: []

================== 10.27.2.33 =================

foundation: []

================== 10.27.2.34 =================

foundation: [9923,9958,9967]

================== 10.27.2.32 =================

foundation: []Once you have identified the cvm with foundation services running, use ssh to connect to that cvm, and stop the service.

nutanix@zzz:~$ genesis stop foundationNutanix KB 5599 has more information on this precheck.

Thankfully, that was it. I am looking forward to the future of LCM and new error handling functionality.